
Sys.setenv(VARIABLE_NAME = "Variable Value")

The process to set up the environmental variables on your machine is the same for Spark as it is for the other variables: you provide a variable name and a variable value, and then add it to the Path variable.
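For readers working from Python rather than R, here is a minimal sketch of the same idea: set one variable and put a folder at the front of Path. The function name, the SPARK_HOME value and the folder paths are placeholders of mine, not values from this post. Like Sys.setenv, it only changes the session it runs in, not the machine settings.

```python
import os

# Windows separates Path entries with a semicolon.
WINDOWS_PATH_SEP = ";"

def set_session_variable(name, value, path_entry=None, env=os.environ):
    """Set one variable and optionally put a folder at the front of PATH.

    Only the mapping passed in (by default this process's environment)
    is changed; the system-wide settings on the machine are untouched.
    """
    env[name] = value
    if path_entry:
        entries = [p for p in env.get("PATH", "").split(WINDOWS_PATH_SEP) if p]
        if path_entry not in entries:
            entries.insert(0, path_entry)
        env["PATH"] = WINDOWS_PATH_SEP.join(entries)
    return env

# Hypothetical folders; substitute the ones on your own machine.
example_env = {"PATH": r"C:\Windows"}
set_session_variable("SPARK_HOME", r"C:\Apps\spark", r"C:\Apps\spark\bin", env=example_env)
```

Passing a plain dictionary, as in the example, lets you check the result without touching your real environment. Leave out the env argument to change the running process.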

HOW TO INSTALL APACHE SPARK ON WINDOWS 7 CODE

Once you have unzipped the file it should look like this:

Step #2: Set up system environmental variables to run Spark (either in Windows, or R):

NOTE: if you decide to set the environmental variables through R, remember that it does not change them on your machine, nor does it flow through to the next session unless they are established in your. The code to do it in R uses Sys.setenv; however, I will discuss setting up on your machine next.

HOW TO INSTALL APACHE SPARK ON WINDOWS 7 SOFTWARE

I would put this in your “C:\Apps\winutils” folder so you can reference it easily (you will need it later). Extract all the files to this location using decompression software like PeaZip or WinZip.
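If you would rather script the extraction than use PeaZip or WinZip, Python's standard zipfile module can do it. This is only a sketch: the function name is mine, and the paths in the commented example call are hypothetical.

```python
import zipfile
from pathlib import Path

def extract_zip(zip_path, target_dir):
    """Extract every file in zip_path into target_dir and return the member names."""
    target = Path(target_dir)
    # Create the destination folder (and any parents) if it does not exist yet.
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
        return archive.namelist()

# Example call with hypothetical paths:
# extract_zip(r"C:\Users\me\Downloads\winutils.zip", r"C:\Apps\winutils")
```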

HOW TO INSTALL APACHE SPARK ON WINDOWS 7 ZIP

Not many blog posts talk about installing this software. I am not really sure what it does, but if you want more information, the Apache wiki covers it. You can download the winutils software from its GitHub repo. Click on the green button (“Clone or download”) on the upper right of the page and download the zip file.

Unlike Spark, I let the system determine where to install Java. I feel like this works best to reduce future issues. When you install Java, pay attention to the folder location of the installation. You will need to know this path to create the JAVA_HOME environmental variable. This step tripped me up for a long time when I first started learning about Spark.
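If you did not note the installation folder, one way to get a candidate JAVA_HOME is to find the java executable on your Path and step up two folders. That relies on the common layout where java sits in a bin folder. Treat this as a rough sketch and check the result by hand: on some Windows installs the java found on Path is a launcher copy in a different folder, so the guess can be wrong. The function name is hypothetical.

```python
import shutil
from pathlib import Path

def guess_java_home(java_executable=None):
    """Return the folder two levels above the java executable, or None if not found.

    Assumes the common layout <JAVA_HOME>/bin/java (java.exe on Windows).
    """
    exe = java_executable or shutil.which("java")
    if exe is None:
        return None
    # resolve() follows symlinks, so we end up next to the real executable.
    return Path(exe).resolve().parent.parent

# Example: print(guess_java_home())
```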

HOW TO INSTALL APACHE SPARK ON WINDOWS 7 LICENSE

I have an Apps (“C:\Apps”) folder where I put all the software that I download and install. This makes it easy to transition to another laptop, as all I have to do is go down the list installing software. I changed/shortened the Spark folder name for simplicity and ease of typing.

As of this writing, Java 9 (I haven’t tested Java 10) has been having a ton of issues and is not stable. I was able to use the most recent version of Java 8. You can download the most recent version for Windows from the Java download page. You have to accept their license agreement before downloading.
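Since the Java version matters here, it can help to check which major version you have. Java 8 and earlier report themselves as 1.x (for example 1.8.0), while Java 9 and later drop the leading 1. The helper below is a small sketch with a name of my choosing; the version strings in the comments are illustrative, not taken from this post.

```python
import re

def java_major_version(version_string):
    """Return the major Java version from strings like '1.8.0_171' or '9.0.4'."""
    match = re.match(r"(\d+)(?:\.(\d+))?", version_string.strip())
    if not match:
        raise ValueError(f"unrecognised version string: {version_string!r}")
    first, second = match.group(1), match.group(2)
    # Java 8 and earlier use the 1.x scheme, so the major version is the second number.
    if first == "1" and second is not None:
        return int(second)
    return int(first)

# Illustrative inputs: "1.8.0_171" gives 8, "9.0.4" gives 9.
```

You can get the string to pass in from the first line printed by `java -version`.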

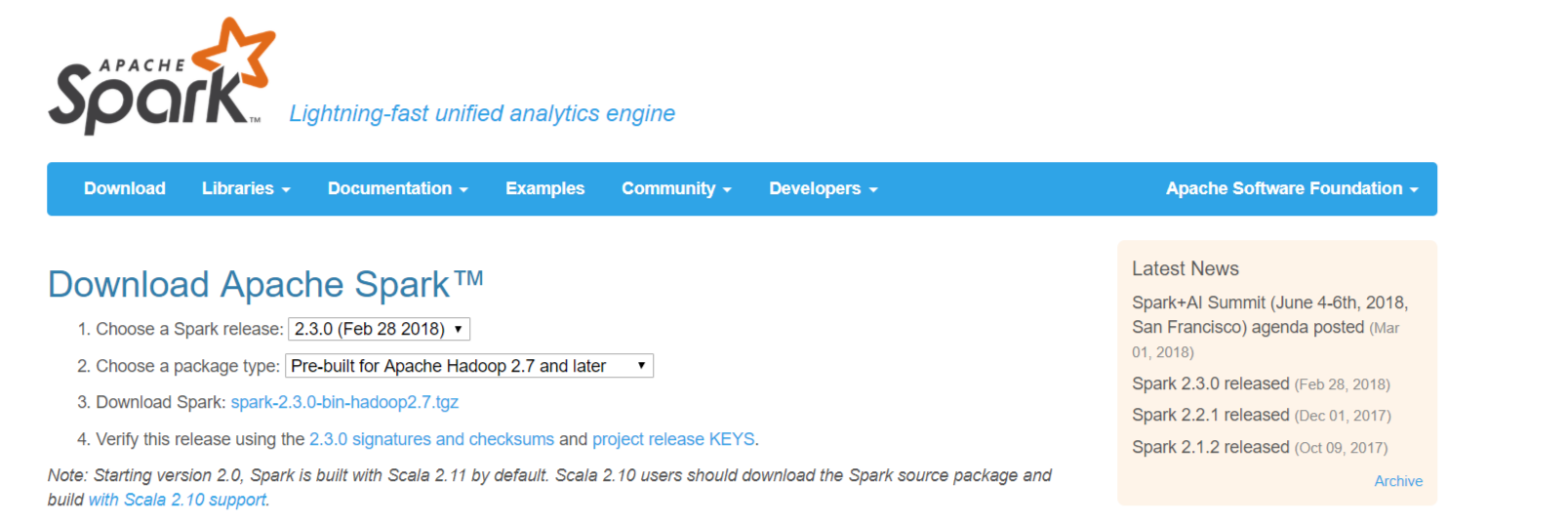

To play with Spark, you do not need to have a cluster of computers. You can simply install it on your machine. When I develop with Spark, I typically write code on my local machine with a small dataset before testing it on a cluster. It is also handy for debugging if you can just run it on your local machine. You can also run Spark code on Jupyter with Python on your desktop. Spark installation can be tricky, and the other web resources seem to miss steps. If you are stuck with Spark installation, try to follow these steps.

(1) Go to the official download page and choose the latest release. For the package type, choose ‘Pre-built for Apache Hadoop’. If you have Cygwin or Git Bash, you can extract the download from the command line. Otherwise you can use WinZip or WinRAR.

First you will need to download Spark, which comes with the package for SparkR. Note, as of this posting, the SparkR package was removed from CRAN, so you can only get SparkR from the Apache website. Spark can be downloaded directly from the Apache website. I downloaded Spark 2.3.0 Pre-built for Apache Hadoop 2.7 and later. After downloading, save the zipped file to a directory of choice, and then unzip the file.
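To illustrate running Spark without a cluster, here is a minimal PySpark sketch that starts a local session and counts the rows of a tiny dataset. It assumes the pyspark package is installed and that Java is set up. The function names, app name and column names are mine, chosen for illustration.

```python
def local_master(cores=None):
    """Build a Spark master URL for local mode: 'local[*]' (all cores) or 'local[N]'."""
    return "local[*]" if cores is None else f"local[{int(cores)}]"

def count_rows_locally(rows, cores=None):
    """Start a local Spark session, load rows into a DataFrame and return the row count."""
    # Imported here so the file can be loaded even where pyspark is not installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master(local_master(cores))
        .appName("local-smoke-test")
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame(rows, ["id", "label"])
        return df.count()
    finally:
        # Always release the local session, even if something above fails.
        spark.stop()

# Example call (requires a working Spark and Java setup):
# count_rows_locally([(1, "a"), (2, "b")], cores=2)
```

If the example call returns without errors, the local installation is at least able to start a session and run a simple job.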

Apache Spark is a powerful framework that uses cluster computing for data processing, streaming and machine learning. It also has multi-language support with Python, Java and R. Spark is easy to use and comparatively faster than MapReduce. For example, you can write Spark code on Hadoop clusters to do transformation and machine learning easily. If you have large binary data streaming into your Hadoop cluster, writing code in Scala might be the best option because it has a native library to process binary data. For most Big Data use cases, you can use the other supported languages. In terms of machine learning, I found the performance and development experience of MLlib (Spark’s machine learning library) to be very good, but the choice of methods is limited. However, as Spark goes through more releases, I think the machine learning library will mature given its popularity.
